IMPaSTo: A Realistic, Interactive Model for Paint

William Baxter, Jeremy Wendt, and Ming C. Lin

Department of Computer Science

University of North Carolina at Chapel Hill

{baxter,jwendt,lin}@cs.unc.edu

http://gamma.cs.unc.edu/IMPaSTo/

Abstract

We present a paint model for use in interactive painting systems that captures a
wide range of styles similar to oils or acrylics. The model includes both a
numerical simulation to recreate the physical flow of paint and an optical
model to mimic the paint appearance. Our physical model for paint is based on a
conservative advection scheme that simulates the basic dynamics of paint,
augmented with heuristics that model the remaining key properties needed for
painting. We allow one active wet layer and an unlimited number of dry layers,
with each layer being represented as a height-field. We represent paintings in
terms of paint pigments rather than RGB colors, allowing us to relight
paintings under any full-spectrum illuminant. We also incorporate an
interactive implementation of the Kubelka-Munk diffuse reflectance model, and
use a novel eight-component color space for greater color accuracy.

We have integrated our paint model into a prototype painting system, with both
our physical simulation and rendering algorithms running as fragment programs
on the graphics hardware. The system demonstrates the model's effectiveness
in rendering a variety of painting styles, from semi-transparent glazes, to
scumbling, to thick impasto.

CR Categories: I.3.4 [Computing Methodologies]: Computer Graphics-Graphics
Utilities; I.3.7 [Computing Methodologies]: Computer Graphics-Three-Dimensional
Graphics and Realism

Keywords: Non-photorealistic rendering, Painting systems, Simulation of
traditional graphical styles

1 Introduction

Each

medium used in painting has particular characteristics. Viscous paint media,

such as oil and acrylic, are popular among artists for their versatility and

ability to capture a wide range of expressive styles. They can be applied

thinly in even layers to achieve deep, lustrous finishes as in the work of

Vermeer, or dabbed on thickly to achieve almost sculptural impasto effects,

as in the works of Monet. With a scumbling technique, short choppy

semi-opaque |

|

|

|

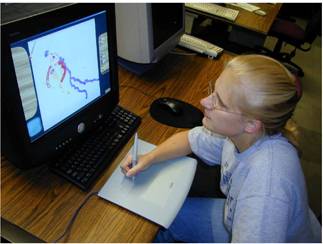

Figure 1: A painting hand-made using

IMPaSTo, after a painting by Edvard Munch. brush strokes create a veil-like haze

over prevIous layers [Gair 1997]. |

|

It is a challenge to design an interactive model that captures the full range
of physical behavior of such paint. Rather than attempt to simulate paint based
on completely accurate physics, we aim instead to devise approximations which
capture the high-order terms, and include heuristics which model the desired
empirical behaviors not captured by the physical terms. Paint is made by mixing
finely ground pigments with a vehicle. Linseed oil is the vehicle typically
used in oil paints, while in acrylics it is a polymer emulsion. Both of these
are non-Newtonian fluids and, as such, are difficult to model mathematically.
Basic properties of non-Newtonian fluids, like viscosity, change depending on
factors like shear rate.¹ Furthermore, the key feature of interest in painting
is how these fluids interact with the complex, rough surfaces of the canvas and
brush, which means the mathematics must deal with geometrically complex moving
boundary conditions and free surface effects. Finally, in addition to these
challenges, a rough calculation shows the effective resolution of real paint is
at least 250 DPI,² and real paintings often measure many square feet in size.
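The rough resolution estimate above can be reproduced with a few lines of arithmetic. The following is only an illustrative sketch: the function names and the 24 x 36 inch canvas are assumptions introduced here, not part of IMPaSTo, while the 80-micron bristle width is the figure quoted in the footnote on stroke detail.

```python
MICRONS_PER_INCH = 25_400  # exact, since 1 inch = 25.4 mm


def dpi_for_feature(width_microns: float, samples_per_feature: float = 1.0) -> float:
    """Dots per inch needed to place `samples_per_feature` samples across one feature."""
    if width_microns <= 0:
        raise ValueError("feature width must be positive")
    return samples_per_feature * MICRONS_PER_INCH / width_microns


def pixels_for_canvas(width_in: float, height_in: float, dpi: float) -> int:
    """Total sample count for a rectangular canvas at the given resolution."""
    return round(width_in * dpi) * round(height_in * dpi)


# One sample per 80-micron bristle (hypothetical helper, figure from the footnote).
one_sample = dpi_for_feature(80)        # 317.5 DPI
# Two samples per bristle, the usual Nyquist criterion.
nyquist = dpi_for_feature(80, 2)        # 635.0 DPI
# Assumed 24 x 36 inch canvas (6 square feet) at the 250 DPI lower bound.
total = pixels_for_canvas(24, 36, 250)  # 54,000,000 samples
```

Under these assumptions, even the 250 DPI lower bound implies tens of millions of samples for a modest canvas, which illustrates why resolution is listed among the challenges for an interactive system.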

Paint also features many stunning optical properties. The

observed reflectance spectrum of a paint is due to a complex subsurface scat-


¹ The best-known example of this is ketchup, in which viscosity decreases under
a high shear rate, e.g., when shaken.

² Strokes often exhibit fine-scale features on the order of the width of a
single bristle. A typical hair is about 80 microns wide, which translates to a
bare minimum resolution of at least 250 dots per inch (DPI), and probably
500 DPI or more to ensure an adequate Nyquist sampling rate.